Having generated a Cloud-Optimized GeoTiff (COG) file containing our NDVI calculations, we are now ready to return to our redlining data and determine if indeed we still see the legacy of those racist practices more than 50 years after they were outlawed.

The aggregation of raster pixels grouped by their containing polygons is something like the spatial equivalent of our .group_by() + .aggregate() operations in ibis, where the raster defines the values and the polygons define the groups.

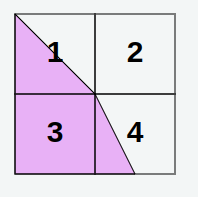

To compute the average NDVI in each polygon, we will introduce yet another module -- exactextract. This package can efficiently compute summary statistics for each polygon given a raster image. While several different modules can perform this kind of aggregation, exactextract is quite fast and scalable, using an efficient algorithm in C++, and gets it’s name for an ability to account for fractional coverage of a raster cell by a polygon, like so.

from exactextract import exact_extract

import ibis

from ibis import _

We begin as before by grabbing our remote city data. Here we have added an additional filter to focus only on residental data, as non-residental sites are not graded even though they are still marked on the map.

con = ibis.duckdb.connect(extensions=["spatial"])

redlines = (

con

.read_geo("/vsicurl/https://dsl.richmond.edu/panorama/redlining/static/mappinginequality.gpkg")

.filter(_.city == "New Haven", _.residential)

)

Spatial operations such as overlaying polygons on the raster requires that the two datasets have matching coordinate reference systems. While we have already transformed our raster data to the ‘longitude, latitude’ coordinates, EPSG:4326, we need to manually add this annotation to declare that our parquet data is already using this projection as well. We do this with .set_crs() option on geopandas object:

city = redlines.execute().set_crs("EPSG:4326")The exact_extract documentation shows this is a flexible method able to take a raster and vector input and compute a range of summary statistics. The method is also somewhat flexible in how it formats the output -- here we indicate we want the output formulated as a ‘geopandas’ object, keeping the geometry column and other useful columns from the original input data.

city_stats = exact_extract("ndvi.tif",

city,

["mean"],

include_geom = True,

include_cols=["label", "grade", "city", "fill"],

output="pandas")

city_stats.head()We will write out this updated file as a parquet dataset. This provides both a mechanism to store our completed calculation and an easy way to go back from geopandas into ibis.

city_stats.to_parquet("new_haven_stats.parquet")We can bring our data back into ibis, where we can do our familiar manipulations.

city = con.read_parquet("new_haven_stats.parquet")and we are finally ready to summarize the data to determine if the spectre of redlining remains: on average, are A grades still greener than D grades? Construct the rest of the ibis code to compute the average NDVI by grade.